In almost every integration implementation we have the need to replicate Oracle Fusion data to transformation layer where we will be doing Validations of FBDI files data before importing to Oracle Fusion Using OIC.

To Replicate data in transformation layer there are multiple ways one them is usi merge operation of DB adapter.

Below are the high level step performed when we are doing through OIC Using DB Adapter merge operation:

1). Create a replication extract(BI Report) in Fusion

2). Create an ESS job in Fusion (If we have large volume of data otherwise call the Report directly)

3). Either use Bursting in BI Publisher to burst the output to some sftp or UCM

Or Use ExportBulkdata operation of ErpIntegrationService web service (This would automatically

upload the output file to UCM (This to be done in OIC)).

4). Create a scheduled integration , which will submit the ESS job in Fusion, create an app driven

integration which will receive the callback from Fusion when the job is completed.

5). Download the Zip file as attachment(it supports upto 1 GB) ->extract the csv output file from it

6). Read CSV output using stage file in segments(as the volume is high), use the report

csv output as schema.

7). Now configure the DB adapter to use Merge Operation (UPSERT) in a particular table

8). Map the fields, save the integration , activate and run the integration.

--------------------------------

Issue with Null field in Mapping

---------------------------------

There will be some fields in the report output which will be null.

Now when we use the merge operation, the null fields are ignored, rest other fields are inserted/updated in that row.

----------------------------------------------------------------

Let us take a real time scenario:

We want to replicate "Supplier Site" from Fusion to transformation layer.

At first if the supplier site is inactive, then the inactive date filed will not be "Blank or Null" in Fusion it will have some date value.

When we replicate the above data, it will insert row correctly.

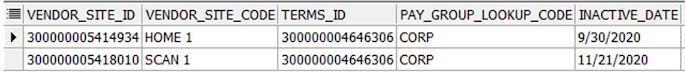

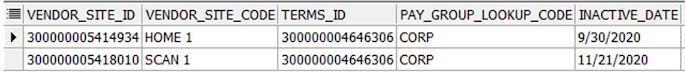

below is screenshot of the first time merge operation in table:

We can see the inactive date is not empty:

Now when we activate the HOME 1 site then the inactive date field in Fusion is set to "Blank or Null" in Fusion.

If we do merge now using DB adapter in OIC this null will be ignored it will have the old inactive date. Rest other field will be updated.

Below is the screenshot from Fusion:

Below is the screenshot from DB after Merge:

We can see that other fields like last update date etc. is updated but inactive field is still having old date. It should have been Null

Now let us do another update in Fusion. We will rename HOME1 to HOME in Fusion.

Below is the screenshot from Fusion:

Now when we do MERGE again using DB adapter in OIC. All the fields will get updated except the inactive field. The inactive filed will still be holding old data.

Below is the screenshot from DB after MERGE

==============================================================

Now since we know the issue let us find out why it is happening.

We are reading file in segment( using CSV schema) and mapping to DB adapter fields, it doesn't pick the null filed for update/insert.

By default DetectOmissions in DB adapter is set to true. Which forbids to take null fields for merge operation.

If we see the XSL mapper for DB adapter we will notice that it has not set the inactive date field to Nillable True.

By default the mapper for Inactive date or any other field will be like below:

We can see that, by default the nillable true is not mentioned for the fields.

There can be many solutions to resolve this issue. We will see 3 of the common ones.

1). When we activate the site then put a large value for date in Fusion instead of keeping it blank.

This will avoid the code change in report or OIC side.

The only problem with this is that the user has to remember to put large value to inactive date

instead of keeping it as blank.

2). Setting "DetectOmissions" to false DB adapter .jca file to false. Export the integration and extract the .iar file. look for the DB adapter(Where merge operation is

being done) under resources. Look for the .jca file as shown in the below screenshot:

Open the .jca file and seacrh for "DetectOmissions" property. By default it will be set to "true".

Set the "DetectOmissions" property to false. Import the integration. The null filed will be taken care of now.

3). Changing in XSL mapper of DB adapter.Export the integration, find the exact mapper from the iar file and edit it by placing below text for

inactive date field or wherever we think it can be null. import the mapper i integration.

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:nil="true"

Below is the screenshot for the same from modified mapper:

------------------------------------------------------------------------------------------------------------------

Sample mapper request to MERGE before making the change in mapper:

We can see the inactive date is not set to nillable true

Below is the sample mapper request to MERGE after making the change: